The U.K.’s National Cyber Security Centre has released a new study that finds generative AI may increase risks from cyber threats such as ransomware.

Overall, the report found that generative AI will provide “capability uplift” to existing threats rather than being a source of brand-new threats. Threat actors will need to be sophisticated enough to gain access to “quality training data, significant expertise (in both AI and cyber), and resources” before they can take advantage of generative AI, which the NCSC said is not likely to occur until 2025. Going forward, threat actors “will be able to analyse exfiltrated data faster and more effectively, and use it to train AI models.”

How generative AI may ‘uplift’ attacks

“We must ensure that we both harness AI technology for its vast potential and manage its risks – including its implications on the cyber threat,” wrote NCSC CEO Lindy Cameron in a press release. “The emergent use of AI in cyber attacks is evolutionary not revolutionary, meaning that it enhances existing threats like ransomware but does not transform the risk landscape in the near term.”

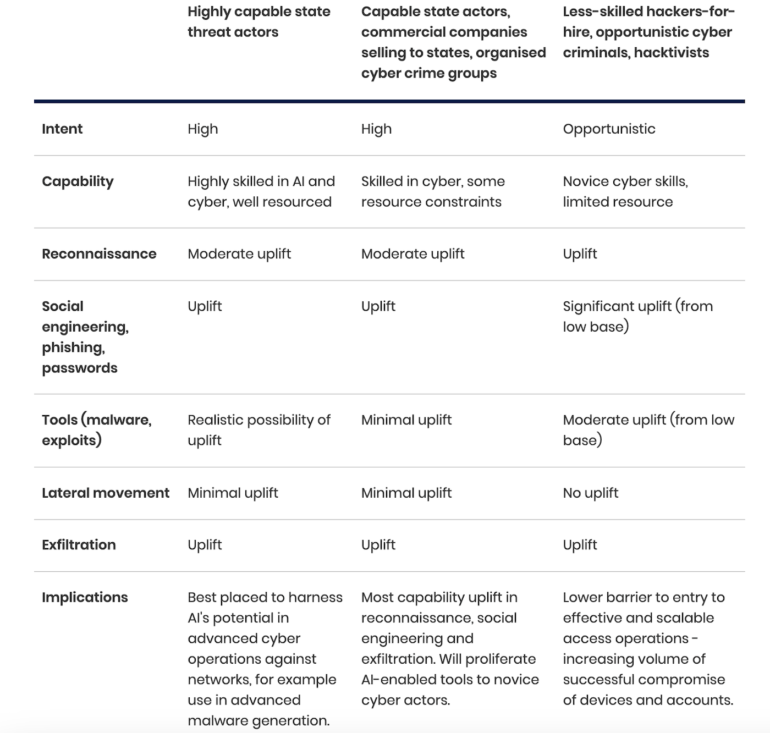

The report sorted threats (Figure A) by their potential for “uplift” from generative AI and by type of threat actor: nation-state sponsored, well-organized, and less-skilled or opportunistic attackers.

Figure A

The generative AI threat through 2025 comes from the “evolution and enhancement of existing tactics, techniques and procedures,” not brand-new ones, the report found.

AI services lower the barrier to entry for ransomware attackers

Ransomware is expected to remain a dominant form of cyber crime, the report said. Just as attackers offer ransomware-as-a-service, they now offer generative AI-as-a-service as well.


“AI services lower barriers to entry, increasing the number of cyber criminals, and will boost their capability by improving the scale, speed and effectiveness of existing attack methods,” said James Babbage, director general for threats at the National Crime Agency, as quoted in the NCSC’s press release about the study.

Ransomware actors are already using generative AI for reconnaissance, phishing and coding, a trend the NCSC expects to continue “to 2025 and beyond.”

Social engineering can be facilitated by AI

Social engineering will see a lot of uplift from generative AI over the next two years, the report found. For example, generative AI will be able to remove the spelling and grammar errors that often mark spam messages. After all, generative AI can create new content for attackers and defenders alike.

Phishing and malware attackers may use AI, but only sophisticated ones are likely to have it

Similarly, threat actors can use generative AI to gain access to accounts or password information in the course of a phishing attack. However, using generative AI for malware will take advanced threat actors, the report said. To create malware that can evade today’s security filters, a generative AI model would need to be trained on large amounts of high-quality exploit data. The only groups likely to have access to that data today are nation-state actors, but the report said there is a “realistic possibility” that such repositories exist.

Vulnerabilities may be exploited at a faster pace due to AI

Network managers looking to patch vulnerabilities before they are exploited may find their jobs getting harder, as generative AI shortens the time between a vulnerability being identified and being exploited.

How defenders can use generative AI

The NCSC pointed out that some of the benefits generative AI provides to attackers can benefit defenders as well. Generative AI can help find patterns that shorten the time it takes to detect or triage attacks and to identify malicious emails or phishing campaigns.

To improve global defenses against attackers using generative AI, the U.K. led the creation of the Bletchley Declaration in November 2023 as a guideline for addressing forward-looking AI risk.

The NCSC and some U.K. private-sector organizations have adopted AI for improved threat detection and security-by-design under the £2.6 billion ($3.3 billion) Cyber Security Strategy announced in 2022.